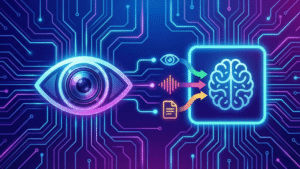

Artificial intelligence is no longer limited to processing a single stream of information. Today’s most advanced systems interpret images, understand language, analyze sound, and combine all of it into unified decision-making. At the core of this evolution sits the modern computer vision framework.

These frameworks give machines the ability to interpret visual input with remarkable accuracy. When paired with language and audio models, they enable AI systems that operate with deeper awareness and broader context. Industries such as healthcare, transportation, retail, and security are already benefiting from this convergence.

To understand the impact, it helps to look at how computer vision fits into the larger multimodal landscape.

From Visual Recognition to Context-Aware Intelligence

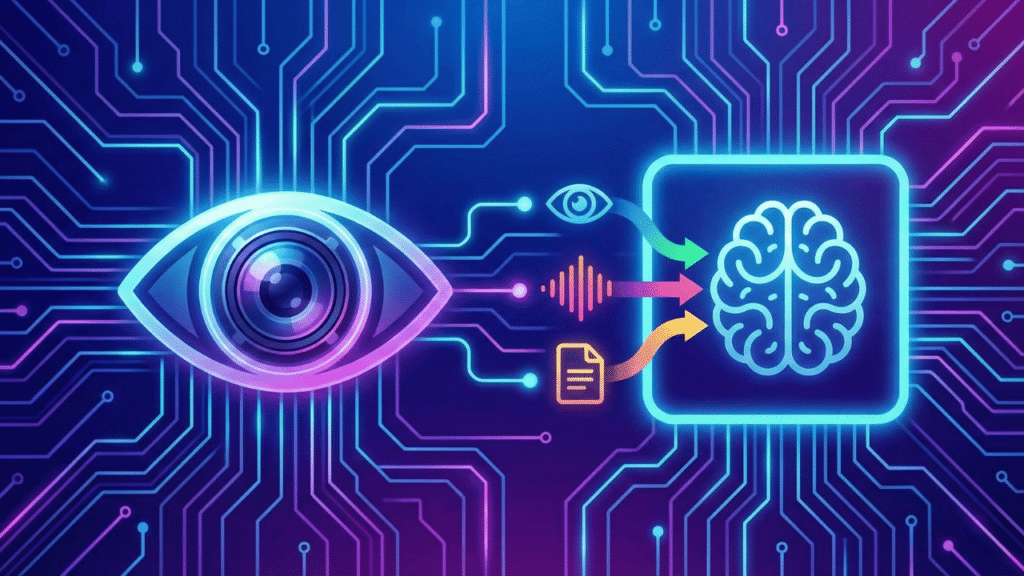

Computer vision focuses on enabling machines to analyze and interpret images and video. It allows AI to detect objects, classify scenes, track motion, and extract meaningful patterns from visual content.

Multimodal AI expands this capability by combining visual information with other inputs such as text and audio. Instead of treating each data type separately, these systems integrate them into a single pipeline.

Consider an autonomous vehicle. Visual models identify pedestrians and traffic signals. Sensor data measures distance and speed. Navigation systems interpret mapping information. Together, these inputs create a coordinated and reliable driving experience.
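As a rough illustration of how such inputs might be combined, the sketch below fuses a vision detection with a measured distance and speed into a single braking decision based on time to collision. The `Detection` class, the `should_brake` function, the hazard labels, and the 2-second threshold are all assumptions made for this example, not part of any real driving stack.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object reported by a (hypothetical) vision model."""
    label: str
    confidence: float


def time_to_collision(distance_m, speed_mps):
    """Seconds until the vehicle reaches the object at its current speed."""
    if speed_mps <= 0:
        return float("inf")  # stationary or reversing: no forward collision
    return distance_m / speed_mps


def should_brake(detection, distance_m, speed_mps,
                 min_confidence=0.5, ttc_threshold_s=2.0):
    """Brake only when the vision and sensor inputs both indicate a risk."""
    is_hazard = detection.label in {"pedestrian", "red_light"}
    is_confident = detection.confidence >= min_confidence
    is_close = time_to_collision(distance_m, speed_mps) < ttc_threshold_s
    return is_hazard and is_confident and is_close


pedestrian = Detection(label="pedestrian", confidence=0.92)
print(should_brake(pedestrian, distance_m=15.0, speed_mps=10.0))  # 1.5 s away
print(should_brake(pedestrian, distance_m=60.0, speed_mps=10.0))  # 6.0 s away
```

Neither input is enough on its own here: the detection says what the object is, and the sensor readings say whether it is close enough to matter.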

Because each input covers gaps the others leave, this layered approach leads to smarter and more adaptable systems. Computer vision provides the visual backbone that makes this integration possible.

Why Frameworks Matter in AI Development

A computer vision framework does more than process images. It provides structured tools, optimized algorithms, and reusable components that accelerate development.

Rather than building models from scratch, engineers can rely on established libraries that support tasks such as:

- Object detection

- Image segmentation

- Facial recognition

- Video tracking

- Scene analysis

These frameworks reduce complexity and shorten development cycles. They also allow teams to focus on system design and integration instead of reinventing core functionality.

In multimodal environments, this efficiency becomes even more important. Visual data must align seamlessly with text embeddings, speech recognition outputs, and structured datasets. A reliable framework simplifies that integration.

Leading Libraries That Support Multimodal Systems

Several widely adopted tools have become essential in visual AI development. Each offers strengths that contribute to building sophisticated multimodal applications.

OpenCV

OpenCV remains one of the most established libraries for image and video processing. It offers a broad set of functions for feature detection, object recognition, and real-time analysis.

PyTorch

PyTorch is popular in research and production environments for its flexibility and dynamic computation graph. It supports rapid experimentation and is widely used for training deep vision networks.

TensorFlow

TensorFlow provides scalable infrastructure for training and deploying machine learning models. It integrates well with computer vision pipelines and supports production-level deployments.

Savant AI

Savant AI focuses on high-performance video and image analytics. It is particularly well-suited for real-time detection and tracking use cases where efficiency is critical.

Selecting the right combination depends on the project’s objectives, data complexity, and deployment requirements.

Designing a Multimodal System with Computer Vision

Integrating a computer vision framework into a multimodal architecture requires careful planning. Success depends on alignment between components and clarity in system design.

1. Define Data Sources and Objectives

Identify the types of inputs involved, whether images, video streams, written content, or audio signals. Each modality should serve a clear purpose within the system.

2. Standardize Data Processing

Different data types must be converted into compatible representations. Image tensors, text embeddings, and audio features should align within the same modeling pipeline.
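One simple way to make representations compatible is to scale each modality's feature vector to unit length and then concatenate the results. The sketch below shows that idea in plain Python. The function names and the toy vectors are assumptions for illustration; real features would come from trained models and are usually far larger.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length so modalities share a comparable range."""
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        return list(vector)  # leave an all-zero vector unchanged
    return [x / norm for x in vector]


def fuse_features(image_features, text_embedding, audio_features):
    """Normalize each modality separately, then concatenate into one vector."""
    fused = []
    for features in (image_features, text_embedding, audio_features):
        fused.extend(l2_normalize(features))
    return fused


# Toy values standing in for real model outputs.
image_features = [3.0, 4.0]
text_embedding = [0.0, 5.0, 12.0]
audio_features = [8.0, 6.0]

fused = fuse_features(image_features, text_embedding, audio_features)
print(len(fused))
print(fused)
```

Normalizing before concatenation keeps a modality with large raw values from dominating the fused vector.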

3. Select the Core Framework

Choose a computer vision library that matches performance expectations and integration needs. Consider compatibility with existing machine learning infrastructure.

4. Optimize for Performance

Real-time systems often require GPU acceleration or distributed computing. Techniques such as model compression and parallel processing can improve efficiency.
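Quantization is one common form of model compression, and it can be sketched in a few lines. The example below maps float weights to 8-bit signed integers with a single shared scale factor, then restores approximate values. It is a simplified illustration with made-up weights, not the scheme used by any particular framework.

```python
def quantize(weights, num_bits=8):
    """Map float weights to signed integers using one shared scale factor."""
    max_int = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / max_int if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.02, 1.0]  # toy values, not from a real model
quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)

# Rounding error per weight is at most about half of one quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized)
print(max_error)
```

Storing each weight as one byte instead of a 32-bit float cuts memory roughly fourfold, at the cost of the small rounding error measured above.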

5. Validate and Iterate

Test synchronization across modalities. Ensure predictions remain consistent when visual and non-visual data interact.
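A basic consistency check might compare the labels predicted from each modality for the same samples and flag where they diverge. The sketch below assumes two hypothetical lists of per-sample labels, one from a vision model and one from an audio model. Agreement is only a proxy for correctness, since both models can agree and still be wrong.

```python
def find_disagreements(vision_labels, audio_labels):
    """Return the indices where the two modalities predict different labels."""
    if len(vision_labels) != len(audio_labels):
        raise ValueError("Both modalities must cover the same samples")
    return [
        index
        for index, (vision, audio) in enumerate(zip(vision_labels, audio_labels))
        if vision != audio
    ]


def agreement_rate(vision_labels, audio_labels):
    """Fraction of samples on which the two modalities agree."""
    disagreements = find_disagreements(vision_labels, audio_labels)
    if not vision_labels:
        return 1.0  # nothing to disagree about
    return 1 - len(disagreements) / len(vision_labels)


# Hypothetical per-sample predictions from two separate models.
vision_labels = ["speech", "applause", "speech", "silence"]
audio_labels = ["speech", "applause", "music", "silence"]

print(find_disagreements(vision_labels, audio_labels))
print(agreement_rate(vision_labels, audio_labels))
```

Tracking this rate across iterations gives an early signal when a change to one model breaks its agreement with the others.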

This structured approach helps transform individual models into a cohesive multimodal system.

Addressing Common Integration Challenges

Building multimodal AI is rewarding but technically demanding. Developers often encounter challenges such as:

- Inconsistent data quality across modalities

- Timing mismatches between audio and visual inputs

- Model explainability concerns

- Increased computational requirements

A well-designed computer vision framework reduces many of these risks. Strong preprocessing tools, robust feature extraction methods, and scalable deployment options create stability across the entire pipeline.
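Timing mismatches in particular can often be handled with a small alignment step. The sketch below pairs each video frame with the nearest audio chunk by timestamp and discards pairs that fall outside a tolerance. The function names, the millisecond timestamps, and the 20 ms tolerance are assumptions chosen for the example.

```python
from bisect import bisect_left


def nearest_timestamp(sorted_timestamps, target):
    """Return the value in a non-empty sorted list that is closest to target."""
    position = bisect_left(sorted_timestamps, target)
    # Only the neighbors on either side of the insertion point can be closest.
    candidates = sorted_timestamps[max(position - 1, 0):position + 1]
    return min(candidates, key=lambda t: abs(t - target))


def align_frames_to_audio(frame_times_ms, audio_times_ms, tolerance_ms=20):
    """Pair each frame with its nearest audio chunk, or None if too far away."""
    pairs = []
    for frame_time in frame_times_ms:
        match = None
        if audio_times_ms:
            match = nearest_timestamp(audio_times_ms, frame_time)
            if abs(match - frame_time) > tolerance_ms:
                match = None  # no audio chunk is close enough to trust
        pairs.append((frame_time, match))
    return pairs


frame_times_ms = [0, 40, 80, 500]  # video frame timestamps
audio_times_ms = [0, 50, 100]      # audio chunk timestamps (sorted)

print(align_frames_to_audio(frame_times_ms, audio_times_ms))
```

Integer milliseconds are used here so that the tolerance comparison is exact. With floating-point seconds, a difference that should equal the tolerance can land slightly above it.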

Equally important is maintaining transparency in model outputs. As AI systems grow more complex, interpretability becomes essential for trust and regulatory compliance.

The Expanding Role of Computer Vision in AI Innovation

Multimodal AI represents a significant step forward in machine intelligence. Instead of operating in isolation, models now collaborate across data types to deliver richer insights.

Computer vision frameworks make this collaboration possible. They serve as the foundation that transforms raw pixels into structured information ready to be combined with language models, audio processors, and predictive analytics systems.

As organizations continue investing in AI-driven automation and decision support, the demand for scalable and reliable vision frameworks will increase. Developers who understand how to integrate these tools effectively will unlock new levels of performance and innovation.

The future of artificial intelligence lies in systems that see, listen, read, and reason together. Computer vision frameworks are central to making that future a reality.