OpenAI’s much-awaited multimodal feature for GPT-4, which lets users upload images and ask questions about them, hasn’t been released yet. However, Microsoft has moved first and is offering early access to a similar image-upload feature in Bing Chat, which is powered by GPT-4. It works much as OpenAI demonstrated during the GPT-4 launch.

This capability has enormous potential across many fields. Whether you’re a student, a professional, or an AI enthusiast, you can use it to ask questions about images as well as text, which can make your interactions with the AI more useful and support better decision-making.

How to Use GPT-4 in Bing Chat with the Multimodal Feature (Right Now)

If you’re curious how to use GPT-4’s multimodal feature in Bing Chat, follow the step-by-step instructions below:

Note: Bing AI Chat mode is exclusive to Microsoft Edge. If you use Chrome, you may find this blog post useful: How to Use New Bing Chat Mode in Google Chrome

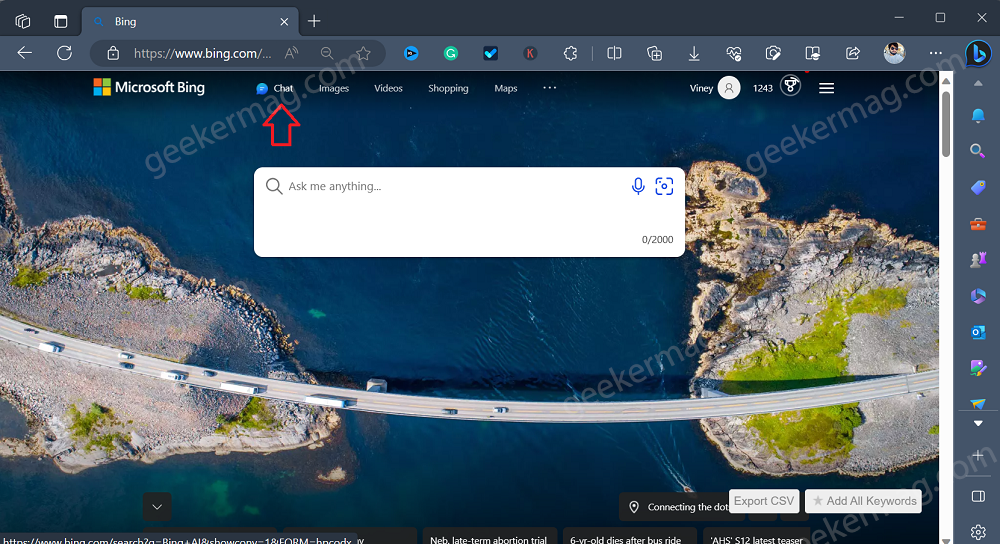

To begin, open Microsoft Edge and go to Bing.com. You can also access this feature on your smartphone through the Bing app, which is available for Android and iOS, or from the Microsoft Edge sidebar.

At the top of the page, click Chat. This takes you to the Bing AI Chat mode page.

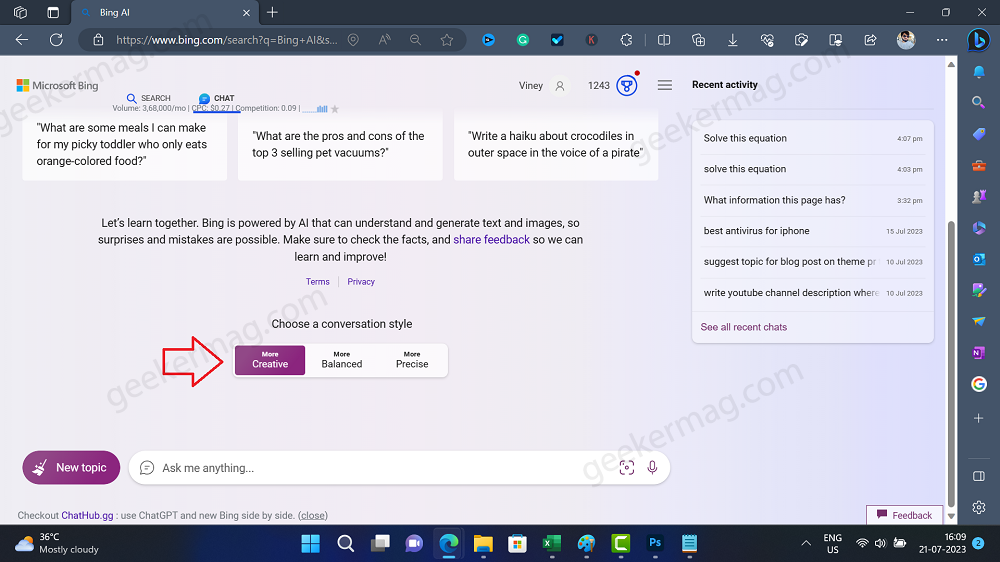

On this page, scroll down to the Choose a conversation style section and switch to Creative mode. This mode lets you use GPT-4’s multimodal feature for free.

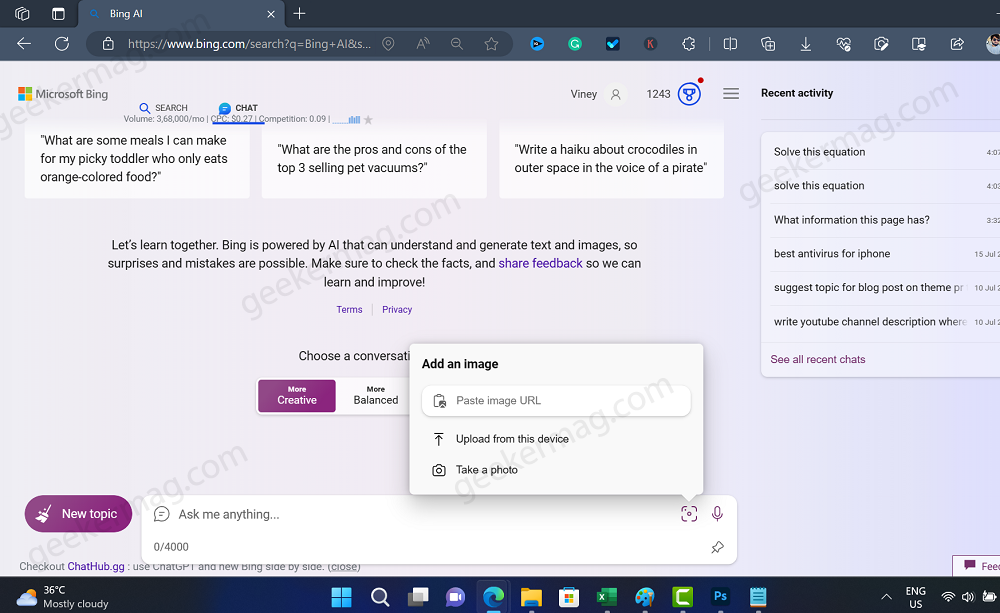

In the Ask me anything box, click the Add an image icon. This opens a menu with three options: Paste image URL, Upload from this device, and Take a photo. Choose whichever option suits you and upload your image.

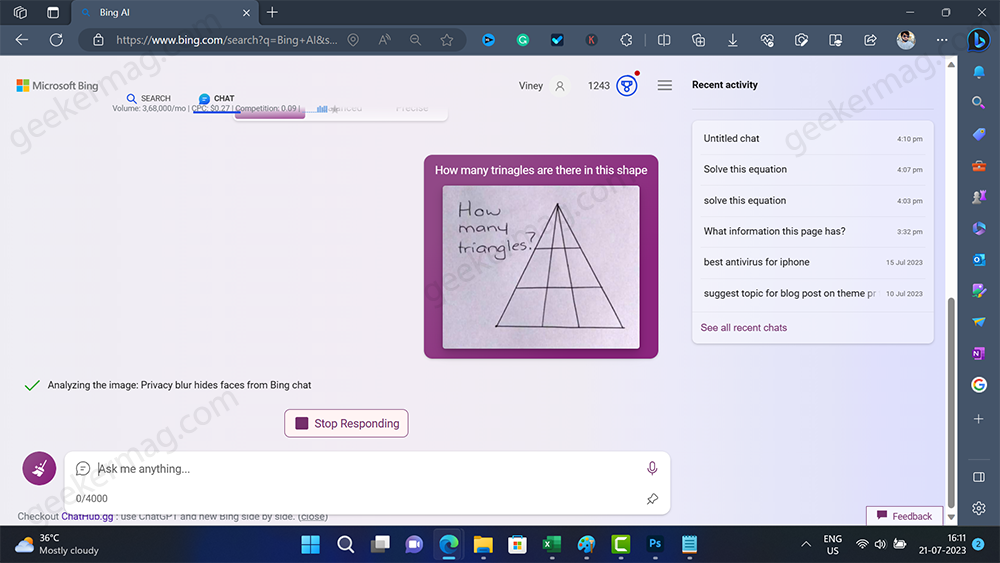

Once the image is uploaded, type your question, and Bing Chat will answer it.

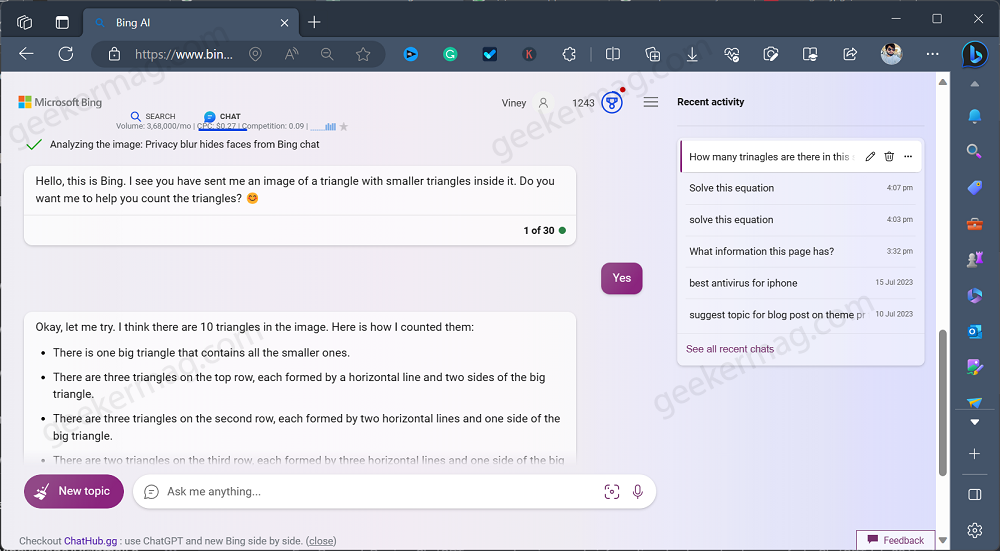

To test the feature’s accuracy, I uploaded an image containing triangles and asked how many triangles there were. Surprisingly, it not only recognized the image but also answered the question.

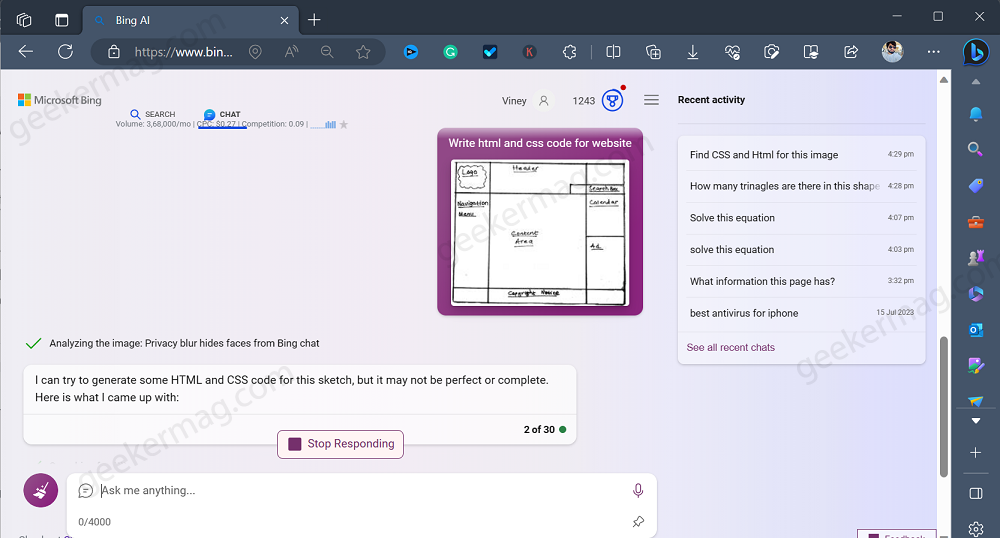

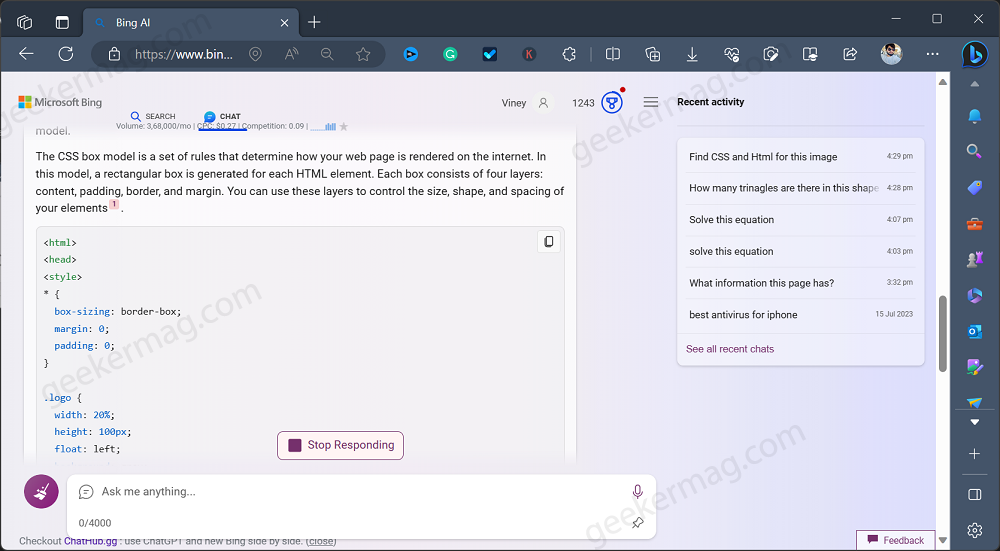

In another example, I uploaded an image of a rough website design and asked for the HTML and CSS code. Here’s the result:

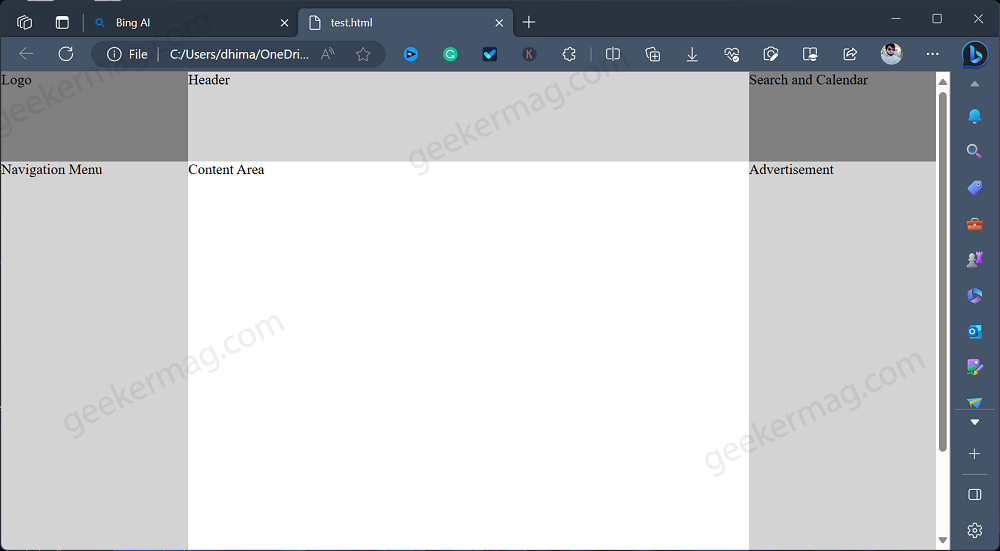

Surprisingly, when I created an HTML file with the same code, this is how it looked:

There are many more possibilities you can explore with GPT-4-powered Bing Chat. By following these steps, you can use GPT-4’s multimodal feature in Bing Chat right now.

Ready to take your AI interactions to the next level with GPT-4’s groundbreaking multimodal feature in Bing Chat? Start exploring the endless possibilities of image uploads and related questions today!


Frequently Asked Questions (FAQs)

Can you get GPT-4 for free?

Yes. Microsoft offers free early access to GPT-4 through Bing Chat, including an image-upload feature similar to the one OpenAI demonstrated.

Can GPT-4 understand and generate responses based on images or videos in Bing Chat?

GPT-4 in Bing Chat can understand and respond to images thanks to its multimodal capabilities, which let it process both text and visual input. This makes it more versatile at understanding user queries.

What are the potential benefits of using GPT-4 in Bing Chat with multimodal capabilities?

Because GPT-4 in Bing Chat understands both text and images, you can ask questions about visual content directly. This can make its responses more relevant and your interactions more engaging and personalized.

2 Comments

Too bad it didn’t count the triangles correctly, though. I see at least 19, though I’m still pre-coffee and there may be more.

Thanks for your response. It also mentions that its information isn’t always correct.

We shouldn’t forget that it’s an AI, and it’s still learning 😉